We show our real-time demo applied to a several scenes at 512x512 pixel resolution.

Datasets. We use 2 scenes from each of: Technicolor, Google Immersive, Shiny, Stanford, and DoNeRF

Details. We use our Small model for the Technicolor and Shiny scenes, so that the frame-rate for rendering is >40 FPS, demonstrating that we can achieve very high visual quality at high-frame rate, even without custom CUDA code. For the remaining datasets, we use our full model, which still provides real-time inference.

We show free view synthesis results on all scenes from the Technicolor dataset.

We show free view synthesis results on all scenes from the Neural 3D Video dataset.

Note that the attached renderings are from 50 frame video clips of the original full length sequences, due to supplemental space constraints (though all of our quantitative metrics are calculated using the full length videos).

We show free view synthesis results on all scenes from the Google Immersive dataset.

Note that the attached renderings are from 50 frame video clips of the original full length sequences, due to supplemental space constraints (though all of our quantitative metrics are calculated using the full length videos).

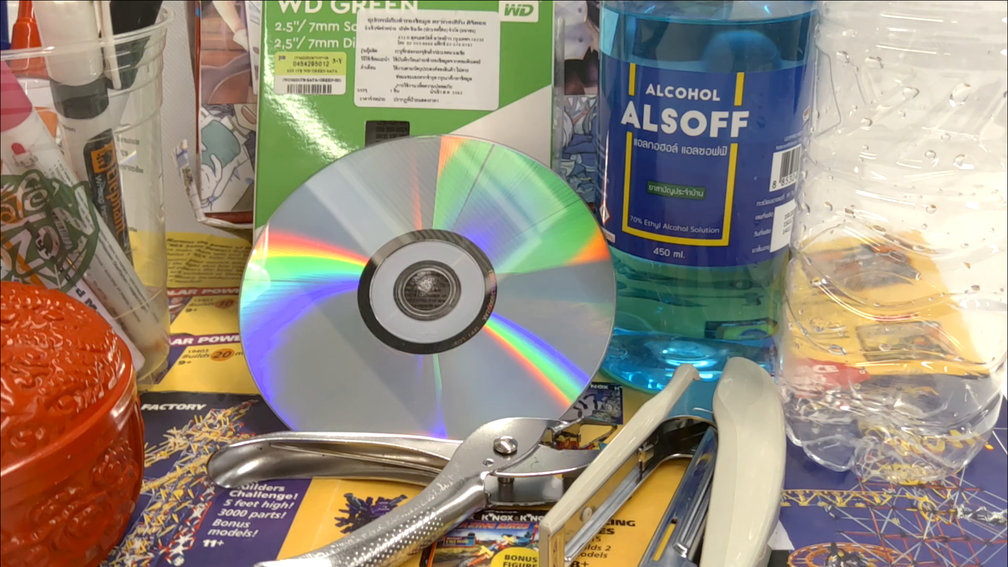

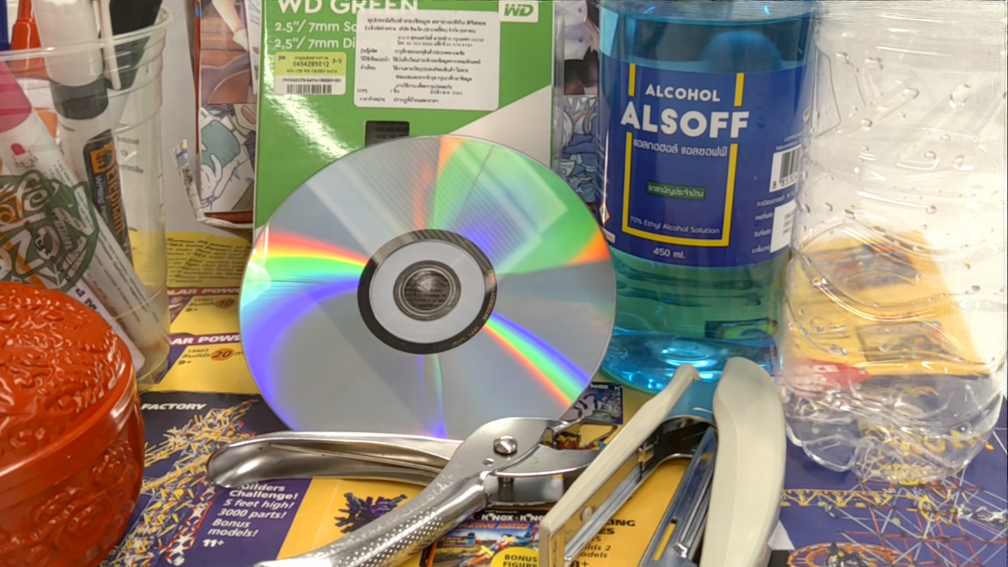

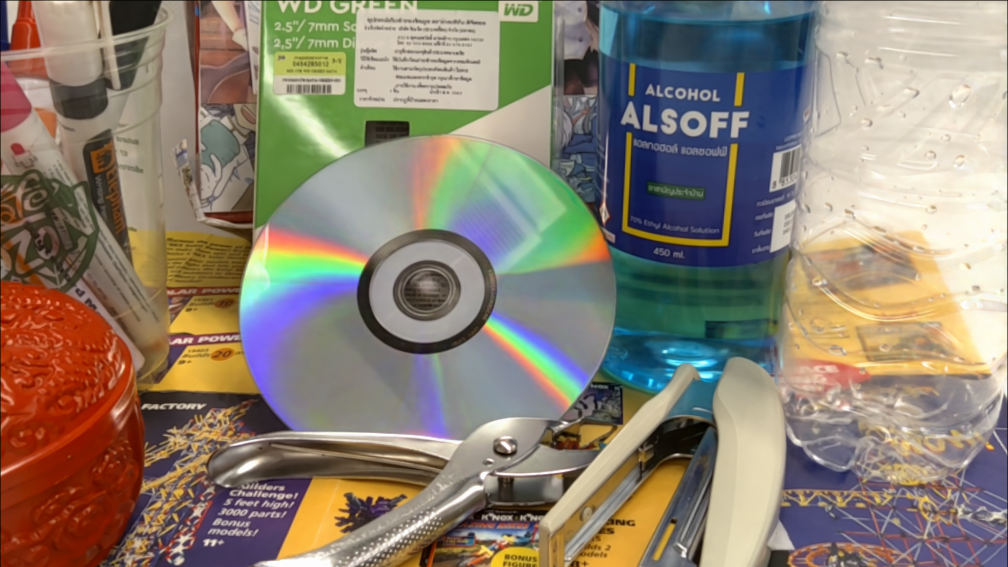

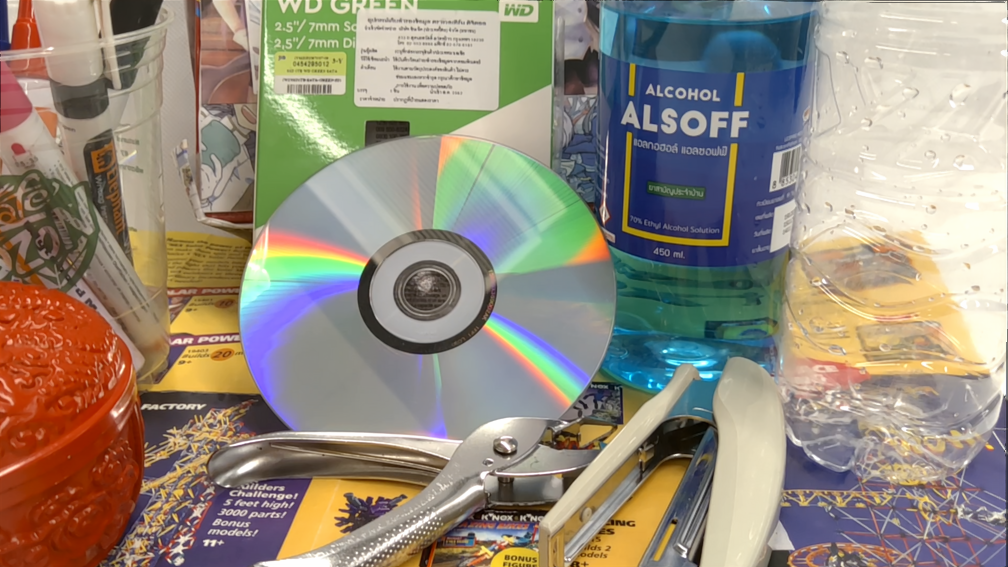

We show free view synthesis results on 4 highly view-dependent scenes containing both challenging reflections and refractions.

Datasets. We use 2 scenes from the Shiny Dataset—CD and Lab— and two from the Stanford dataset—Tarot (Small) and Tarot (Large).

We provide qualitative comparisons to other static view synthesis methods on highly view-dependent scenes.

Datasets. We use 2 scenes from the Shiny Dataset—CD and Lab.

Baselines. We better reproduce reflections and refractions than both NeRF and NeX, while performing comparably to Light Field Neural Rendering [Suhail et al. 2022] in terms of quality.

Our method can also produce renderings of challenging content at real-time rates (see our demo), while Light Field Neural rendering [Suhail et al. 2022] takes 60 seconds to render an 800x800 image on a V100 GPU.

We provide qualitative comparisons to Google Immersive Light Field Video [Broxton et al. 2022].

We take screenshots from Google Immersive's high-resolution web-viewer, as there is no easy way to render specific viewpoints.

Our method provides comparable visual quality at a lower memory cost, while also training in hours rather than days.